Mastering Q-Learning: The Backbone of Reinforcement Learning Algorithms 🤖💡

If you’ve ever wondered how machines make decisions in an unknown environment, Q-Learning is the algorithm you need to know about!

As one of the most fundamental and widely used reinforcement learning methods, Q-Learning is a building block for many more advanced AI systems today, including Deep Q-Networks.

Let’s delve into the details of how it works, its strengths, and where it’s headed in the ever-evolving world of AI. 🌟

What is Q-Learning? 🧐

Q-Learning is a model-free reinforcement learning algorithm that enables an agent to learn how to act optimally in a given environment.

The goal is simple: maximize the total (discounted) reward collected over time by learning the best action to take in each situation.

Imagine you’re teaching a robot to navigate a maze.

It starts by wandering aimlessly but gradually learns the most efficient path by remembering which actions yield the best rewards.

That memory?

It’s stored in the Q-table, the heart of Q-Learning.

The Magic Behind the Algorithm 🪄

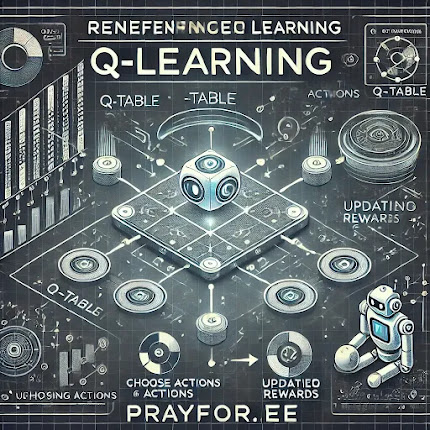

Here’s a step-by-step breakdown of how Q-Learning works:

1️⃣ Initialization:

- Create a Q-table, where rows represent states, and columns represent actions.

- Initialize all Q-values to zero (or to small random values).
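As a minimal sketch of the initialization step, assuming states and actions are numbered with integer indices, a Q-table can be a plain list of rows where `q[state][action]` holds one Q-value. The function name `init_q_table` and its parameters are hypothetical, chosen only for illustration.

```python
import random

def init_q_table(n_states, n_actions, random_init=False, scale=0.01, seed=None):
    """Return a Q-table as a list of rows, indexed as q[state][action]."""
    rng = random.Random(seed)
    if random_init:
        # Small random values in [-scale, scale] break ties between actions.
        return [[rng.uniform(-scale, scale) for _ in range(n_actions)]
                for _ in range(n_states)]
    # Zero initialization: every action starts out equally good.
    # A comprehension (not [[0.0] * n] * m) keeps each row an independent list.
    return [[0.0] * n_actions for _ in range(n_states)]

q = init_q_table(n_states=5, n_actions=2)
print(q[0])  # [0.0, 0.0]
```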

2️⃣ Interaction:

- At each time step, the agent observes its current state and chooses an action using an exploration strategy such as ε-greedy, which mixes two behaviors:

- Exploration (with probability ε): The agent tries a random action to discover new possibilities.

- Exploitation (with probability 1 − ε): The agent chooses the action with the highest current Q-value.
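A minimal sketch of ε-greedy selection, assuming the Q-values for the current state are passed in as a list (one row of the Q-table). The function name `epsilon_greedy` is hypothetical; breaking ties at random is one common choice, not a requirement of the algorithm.

```python
import random

def epsilon_greedy(q_row, epsilon, rng=random):
    """Pick an action index from one row of the Q-table (the current state)."""
    if rng.random() < epsilon:
        # Explore: pick any action uniformly at random.
        return rng.randrange(len(q_row))
    # Exploit: pick the action with the highest Q-value (ties broken randomly).
    best = max(q_row)
    best_actions = [a for a, v in enumerate(q_row) if v == best]
    return rng.choice(best_actions)

rng = random.Random(0)
print(epsilon_greedy([0.1, 0.7, 0.3], epsilon=0.0, rng=rng))  # 1 (always exploits)
```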

3️⃣ Feedback:

- The agent executes the action and receives a reward from the environment.

- It also observes the next state it transitions to.
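To make the feedback step concrete, here is a sketch of a hypothetical environment: a one-dimensional corridor of five cells where action 0 moves left, action 1 moves right, and reaching the rightmost cell pays +1 and ends the episode. The corridor, its reward, and the `step` function are illustrative assumptions, not a standard benchmark.

```python
def step(state, action, n_states=5):
    """Hypothetical 1-D corridor: action 0 moves left, action 1 moves right.

    Returns (next_state, reward, done). Reaching the right end pays +1
    and ends the episode; every other move pays 0.
    """
    if action == 1:
        next_state = min(state + 1, n_states - 1)
    else:
        next_state = max(state - 1, 0)  # bumping into the left wall stays put
    done = next_state == n_states - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

print(step(3, 1))  # (4, 1.0, True)
print(step(0, 0))  # (0, 0.0, False)
```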

4️⃣ Update Rule:

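- Update the Q-value for the current state-action pair (s, a) using an update rule derived from the Bellman optimality equation:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

- r is the reward received and s′ is the next state; the max term is taken as 0 when s′ is terminal. The bracketed quantity is the temporal-difference (TD) error.

- α (Learning Rate, 0 < α ≤ 1): Determines how much new information overrides the old.

- γ (Discount Factor, typically between 0 and 1): Balances immediate and future rewards; values close to 1 make the agent more far-sighted.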
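The update rule translates almost line for line into code. This is a sketch assuming the Q-table is a list of rows indexed as `q[state][action]` and that the environment reports whether the episode ended (`done`); the function name `q_update` is hypothetical.

```python
def q_update(q, state, action, reward, next_state, done, alpha=0.1, gamma=0.9):
    """Apply one Q-Learning update to q[state][action] in place."""
    # Terminal transitions have no future value to bootstrap from.
    future = 0.0 if done else max(q[next_state])
    td_target = reward + gamma * future          # r + γ · max_a' Q(s', a')
    td_error = td_target - q[state][action]      # the bracketed TD error
    q[state][action] += alpha * td_error         # move a fraction α toward the target
    return q[state][action]

q = [[0.0, 0.0], [0.0, 2.0]]
new_value = q_update(q, state=0, action=1, reward=1.0, next_state=1,
                     done=False, alpha=0.5, gamma=0.9)
print(round(new_value, 6))  # 1.4, i.e. 0 + 0.5 * (1 + 0.9 * 2 - 0)
```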

5️⃣ Repeat:

- Repeat steps 2 to 4 over many episodes until the Q-values converge; acting greedily with respect to the converged Q-table then gives the optimal policy.
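Putting the five steps together, here is a minimal end-to-end sketch on a hypothetical five-cell corridor (the agent starts at the left end, action 1 moves right, and reaching the right end pays +1). The environment, the hyperparameter values, and the names `step` and `train` are illustrative assumptions, not part of any library.

```python
import random

def step(state, action, n_states):
    # Hypothetical corridor: 0 = left, 1 = right; the right end is the goal.
    next_state = min(state + 1, n_states - 1) if action == 1 else max(state - 1, 0)
    done = next_state == n_states - 1
    return next_state, (1.0 if done else 0.0), done

def train(n_states=5, n_actions=2, episodes=500, alpha=0.5, gamma=0.9,
          epsilon=0.1, max_steps=100, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]             # 1. initialize
    for _ in range(episodes):                                     # 5. repeat
        state = 0
        for _ in range(max_steps):
            if rng.random() < epsilon:                            # 2. explore...
                action = rng.randrange(n_actions)
            else:                                                 # ...or exploit
                best = max(q[state])
                action = rng.choice([a for a, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, action, n_states)  # 3. feedback
            future = 0.0 if done else max(q[next_state])              # 4. update
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
            if done:
                break
    return q

q = train()
# Greedy policy for the non-terminal cells: the argmax action in each row.
greedy_policy = [max(range(len(row)), key=row.__getitem__) for row in q[:-1]]
print(greedy_policy)  # [1, 1, 1, 1]: always move right
```

In this deterministic corridor the learned value of moving right from the cell next to the goal approaches 1, and each step further away is discounted by another factor of γ.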

Key Advantages of Q-Learning 🏆

- Model-Free: Q-Learning doesn’t need a model of the environment’s transition probabilities or reward function; it learns directly from experience, which makes it usable in real-world problems where no such model exists.

- Simplicity: Easy to implement and understand, Q-Learning is a favorite for teaching RL concepts.

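- Guaranteed Convergence: For any finite Markov Decision Process (MDP), tabular Q-Learning converges to the optimal Q-values, and hence the optimal policy, with probability 1, provided every state-action pair keeps being visited and the learning rate decays appropriately.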
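One learning-rate schedule that meets the usual step-size conditions of the convergence guarantee (the learning rates for each state-action pair sum to infinity while their squares sum to a finite value) is α = 1/n, where n counts how many times that pair has been updated. The class below is a hypothetical helper sketching this; in practice a small constant α is also common, even though it falls outside the formal conditions.

```python
from collections import defaultdict

class CountBasedLearningRate:
    """alpha_n(s, a) = 1 / n, where n counts updates to the pair (s, a).

    The harmonic sequence 1, 1/2, 1/3, ... has a divergent sum but a
    convergent sum of squares, matching the step-size conditions.
    """

    def __init__(self):
        self.counts = defaultdict(int)  # (state, action) -> number of updates

    def __call__(self, state, action):
        self.counts[(state, action)] += 1
        return 1.0 / self.counts[(state, action)]

lr = CountBasedLearningRate()
print([lr(0, 1) for _ in range(4)])  # [1.0, 0.5, 0.3333333333333333, 0.25]
print(lr(2, 0))                      # 1.0 (each pair has its own counter)
```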

Real-World Applications 🌍

1. Gaming 🎮

From teaching agents to play Pac-Man to playing Atari video games directly from screen pixels (via Deep Q-Networks, Q-Learning’s deep-learning extension), Q-Learning has laid the foundation for many modern game-playing agents.

2. Robotics 🤖

Robots use Q-Learning to navigate unknown environments, avoid obstacles, and learn new tasks.

3. Autonomous Systems 🚗

Q-Learning and its deep variants have been applied in research on self-driving cars to learn decisions such as lane switching and obstacle avoidance.

Challenges of Q-Learning ⚠️

- Scalability Issues: The Q-table needs one entry per state-action pair, and the number of states typically grows exponentially with the number of state variables, which makes tabular Q-Learning impractical for complex environments.

- Exploration-Exploitation Dilemma: Striking the right balance between trying new actions and sticking to known rewards can be tricky.

- Sensitivity to Hyperparameters: The learning rate, discount factor, and exploration rate (ε) need careful tuning for good results.
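A quick calculation shows how fast a Q-table grows. This sketch assumes a hypothetical state described by several discrete variables, each taking 10 values, with 4 available actions; `q_table_entries` is an illustrative helper.

```python
def q_table_entries(values_per_variable, n_variables, n_actions):
    """Number of Q-table entries for a state made of n discrete variables."""
    n_states = values_per_variable ** n_variables  # exponential in the variable count
    return n_states * n_actions                    # one entry per state-action pair

for n_vars in (2, 4, 8):
    print(n_vars, q_table_entries(10, n_vars, 4))
# 2 400
# 4 40000
# 8 400000000
```

At 8 bytes per floating-point value, the last table already needs about 3.2 GB, and most of its entries would never be visited often enough to be learned well.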

Q-Learning vs. Modern RL Algorithms 🔄

While Q-Learning was a revolutionary step forward, in many applications it has been superseded by extensions such as Deep Q-Networks (DQN), which replace the Q-table with a neural network that approximates Q-values, making complex, high-dimensional problems tractable.

However, Q-Learning remains a cornerstone for understanding RL and is still effective in simpler scenarios.
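To illustrate what replacing the Q-table with a function means, without pulling in a deep-learning framework, here is a minimal sketch of Q-Learning with a linear approximator, Q(s, a) = w · φ(s, a). This is a deliberate simplification and not DQN: there is no neural network, experience replay, or target network, and the feature vectors φ are assumed to be supplied by the caller. The helper names are hypothetical.

```python
def q_value(weights, feats):
    """Linear approximation: Q(s, a) = w . phi(s, a)."""
    return sum(w * f for w, f in zip(weights, feats))

def semi_gradient_update(weights, feats, reward, next_feats_per_action, done,
                         alpha=0.1, gamma=0.9):
    """One Q-Learning step with a linear approximator instead of a table.

    feats: phi(s, a) for the action actually taken.
    next_feats_per_action: [phi(s', a') for every action a'].
    """
    future = 0.0 if done else max(q_value(weights, f) for f in next_feats_per_action)
    td_error = reward + gamma * future - q_value(weights, feats)
    # The gradient of w . phi with respect to w is phi itself.
    return [w + alpha * td_error * f for w, f in zip(weights, feats)]

w = [0.0, 0.0]
w = semi_gradient_update(w, feats=[1.0, 0.5], reward=1.0,
                         next_feats_per_action=[[0.0, 1.0], [1.0, 0.0]], done=False)
print(w)  # [0.1, 0.05]
```

With one-hot features (one per state-action pair), this update reduces exactly to the tabular rule; richer features let similar states share what they learn, which is the idea DQN scales up with neural networks.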

Why You Should Care 🌟

Q-Learning may seem like a simple concept, but its implications are profound.

Whether you’re a student diving into AI or a professional exploring new tech, mastering Q-Learning gives you a solid foundation for tackling advanced RL problems.

It’s a first step toward teaching machines to learn from trial and error, much as humans do. 🤖💡
