Proximal Policy Optimization (PPO): Reinforcement Learning’s Gold Standard 🌟🤖

When it comes to state-of-the-art reinforcement learning algorithms, Proximal Policy Optimization (PPO) is a name you’re bound to encounter.

Created by OpenAI in 2017, PPO strikes the perfect balance between performance and simplicity, making it a favorite for tackling real-world AI challenges.

Let’s dive deep into what makes PPO the superstar of reinforcement learning! 🚀

What is PPO? 🤔

PPO is a policy gradient algorithm that simplifies and improves upon its predecessors like Trust Region Policy Optimization (TRPO).

It optimizes the policy by maximizing a clipped objective function that discourages drastic updates, which could otherwise destabilize training.

Think of PPO as the disciplined version of policy optimization: it makes real progress with each update but never strays too far from the current policy. 😎

How PPO Works: Breaking It Down 🛠️

1️⃣ Policy Gradient Basics

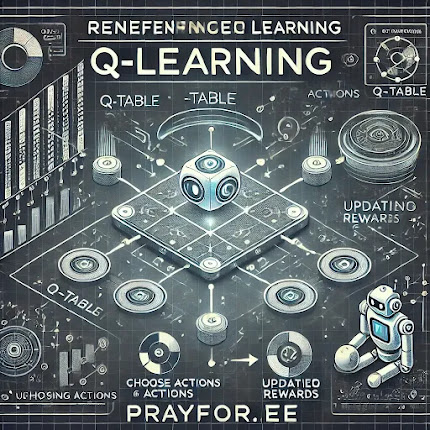

PPO builds on the concept of policy gradients, where the policy (decision-making strategy) is directly optimized to maximize rewards. This differs from value-based methods like Q-learning, which focus on estimating the value of actions.
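To make the idea concrete, here is a minimal REINFORCE-style sketch. It shows a plain policy gradient, not PPO itself, on a hypothetical three-armed bandit with deterministic rewards. The softmax policy, the learning rate, and the running-average baseline are illustrative assumptions. The point is that the policy parameters are pushed directly in the direction that raises the probability of high-reward actions, with no value table in between.

```python
import numpy as np


def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    z = logits - logits.max()
    e = np.exp(z)
    return e / e.sum()


def reinforce_bandit(arm_rewards, steps=2000, lr=0.1, seed=0):
    """Train a softmax policy on a toy bandit with REINFORCE (hypothetical setup)."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(len(arm_rewards))  # policy parameters: one logit per action
    baseline = 0.0  # running-average reward, used to reduce gradient variance
    for _ in range(steps):
        probs = softmax(theta)
        a = rng.choice(len(theta), p=probs)  # sample an action from the policy
        r = arm_rewards[a]  # deterministic reward in this toy example
        # Gradient of log softmax w.r.t. the logits: one_hot(a) - probs.
        grad_log_pi = -probs
        grad_log_pi[a] += 1.0
        # Gradient ascent on expected reward, scaled by the advantage (r - baseline).
        theta += lr * (r - baseline) * grad_log_pi
        baseline += 0.01 * (r - baseline)
    return softmax(theta)


probs = reinforce_bandit(np.array([0.0, 0.5, 1.0]))
print(probs)  # most of the probability mass should end up on the last arm
```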

2️⃣ The Clipped Objective

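The highlight of PPO is its clipped objective function, which discourages the policy from changing too much during each update. This is done by clipping the probability ratio between the new policy and the old policy:

$$
r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}, \qquad
L^{\text{CLIP}}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(r_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]
$$

Here $\hat{A}_t$ is an estimate of the advantage of taking action $a_t$ in state $s_t$, and $\epsilon$ (typically 0.2) sets the width of the clipping range.

Because the objective takes the minimum of the clipped and unclipped terms, pushing $r_t(\theta)$ outside $[1-\epsilon, 1+\epsilon]$ earns no extra credit. The clipping therefore keeps updates within a safe range and removes the incentive for overcorrections that could destabilize training.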
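The clipped objective is straightforward to evaluate on a batch of samples. Below is a minimal NumPy sketch. It assumes the log-probabilities of the sampled actions under the new and old policies, plus the advantage estimates, are already available as arrays. The function name is hypothetical, and a real implementation would maximize this quantity with an autodiff framework rather than plain NumPy.

```python
import numpy as np


def clipped_surrogate(new_logp, old_logp, advantages, eps=0.2):
    """Sample estimate of L^CLIP: mean of min(r * A, clip(r, 1-eps, 1+eps) * A)."""
    ratio = np.exp(new_logp - old_logp)  # r_t = pi_new / pi_old, computed in log space
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    # Taking the elementwise minimum makes the objective a pessimistic (lower) bound.
    return np.mean(np.minimum(unclipped, clipped))


old_logp = np.zeros(3)
new_logp = np.log(np.array([1.5, 0.5, 1.0]))  # ratios 1.5, 0.5 and 1.0
advantages = np.array([1.0, -1.0, 2.0])
print(clipped_surrogate(new_logp, old_logp, advantages))  # about 0.8, i.e. (1.2 - 0.8 + 2.0) / 3
```

Note how the first sample's ratio of 1.5 only counts as 1.2: once the ratio passes 1 + ε on a positive advantage, raising it further no longer increases the objective.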

3️⃣ Surrogate Objective

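The clipped objective is a surrogate: instead of the true expected return, PPO maximizes a proxy estimated from data collected by the old policy. In practice it is combined with a value-function loss (for the critic) and an entropy bonus, and it is the entropy bonus that encourages exploration.

PPO updates the policy iteratively. It collects a batch of experience, runs several epochs of minibatch gradient ascent on the surrogate, then repeats with the updated policy, making small, stable improvements over time.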
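A sketch of how these pieces are typically combined, following the combined objective from the PPO paper, L_CLIP - c1 * L_VF + c2 * S, plus the "several epochs over one batch" loop. The coefficient values `c1=0.5` and `c2=0.01`, the function names, and the shuffled-minibatch scheme are illustrative assumptions. A real implementation would compute log-probabilities, values, and entropies with a neural network and differentiate the loss with an autodiff library.

```python
import numpy as np


def ppo_loss(new_logp, old_logp, advantages, values, returns, entropy,
             eps=0.2, c1=0.5, c2=0.01):
    """Loss to *minimize*: -(L_CLIP - c1 * L_VF + c2 * S)."""
    ratio = np.exp(new_logp - old_logp)
    l_clip = np.mean(np.minimum(ratio * advantages,
                                np.clip(ratio, 1 - eps, 1 + eps) * advantages))
    l_vf = np.mean((values - returns) ** 2)  # squared-error value (critic) loss
    s = np.mean(entropy)  # entropy bonus: a higher value keeps the policy exploring
    return -(l_clip - c1 * l_vf + c2 * s)


def minibatch_epochs(batch_size, minibatch_size, epochs, rng):
    """Yield index arrays: several shuffled passes over the same batch."""
    for _ in range(epochs):
        perm = rng.permutation(batch_size)
        for start in range(0, batch_size, minibatch_size):
            yield perm[start:start + minibatch_size]


rng = np.random.default_rng(0)
n_updates = sum(1 for _ in minibatch_epochs(2048, 64, 10, rng))
print(n_updates)  # 320 gradient steps per batch: (2048 / 64) * 10
```

Reusing each batch for several epochs is what lets PPO squeeze more learning out of every rollout, and the clipping is what makes that reuse safe.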

4️⃣ Multi-threaded Environments

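Like A3C, PPO is typically run with many parallel actors. Each actor runs the current policy in its own copy of the environment for a fixed number of timesteps, and their combined experience forms a single batch for the next update, which speeds up data collection. Unlike A3C, these updates are usually synchronous. 🌍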
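The advantage estimates that PPO's objective relies on are computed from exactly this kind of batch. Below is a sketch of generalized advantage estimation (GAE), the estimator used in the PPO paper, applied to rewards from N parallel actors over T timesteps stored as arrays of shape (T, N). The array layout, the `dones` convention (1 means the episode ended after that step), and the defaults γ = 0.99 and λ = 0.95 are assumptions for illustration.

```python
import numpy as np


def compute_gae(rewards, values, dones, last_values, gamma=0.99, lam=0.95):
    """GAE over a (T, N) rollout from N parallel environments.

    rewards, values, dones: arrays of shape (T, N); dones[t] = 1 if the
    episode ended after step t. last_values: (N,) value estimates of the
    states reached after the final step, used to bootstrap.
    """
    T, N = rewards.shape
    advantages = np.zeros((T, N))
    gae = np.zeros(N)
    for t in reversed(range(T)):
        next_values = last_values if t == T - 1 else values[t + 1]
        nonterminal = 1.0 - dones[t]  # stop bootstrapping across episode ends
        delta = rewards[t] + gamma * next_values * nonterminal - values[t]  # TD error
        gae = delta + gamma * lam * nonterminal * gae
        advantages[t] = gae
    returns = advantages + values  # targets for the value-function loss
    return advantages, returns


# Two parallel environments, three steps; environment 1 ends an episode after step 1.
rewards = np.ones((3, 2))
values = np.zeros((3, 2))
dones = np.array([[0.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
adv, ret = compute_gae(rewards, values, dones, np.zeros(2), gamma=1.0, lam=1.0)
print(adv[:, 0], adv[:, 1])  # [3. 2. 1.] [2. 1. 1.]
flat_adv = adv.reshape(-1)  # flatten the N * T samples into one training batch
```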

Key Features of PPO 🔑

1. Stability Without Complexity

PPO achieves the stability of algorithms like TRPO without their computational overhead. No second-order derivatives or line searches are needed!

2. Versatility

PPO works seamlessly in both discrete and continuous action spaces, making it ideal for a wide range of tasks.
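Part of the reason is that PPO only needs log π(a|s) for the sampled actions to form its probability ratio, and that is easy to compute for either kind of action. A sketch, assuming a categorical distribution over logits for discrete actions and a diagonal Gaussian with a state-independent log standard deviation for continuous ones. These are common choices, not the only ones, and the function names are hypothetical.

```python
import numpy as np


def categorical_log_prob(logits, actions):
    """log pi(a|s) for discrete actions. logits: (B, K); actions: (B,) ints."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))  # log-softmax
    return log_probs[np.arange(len(actions)), actions]


def diag_gaussian_log_prob(mean, log_std, actions):
    """log pi(a|s) for continuous actions. mean, actions: (B, D); log_std: (D,)."""
    z = (actions - mean) / np.exp(log_std)
    return np.sum(-0.5 * z ** 2 - log_std - 0.5 * np.log(2 * np.pi), axis=1)


def ratio(new_logp, old_logp):
    """Probability ratio pi_new / pi_old, computed in log space."""
    return np.exp(new_logp - old_logp)


# Discrete: four equally likely actions, so each has probability 1/4.
disc = categorical_log_prob(np.zeros((2, 4)), np.array([0, 3]))
# Continuous: a 2-D action exactly at the mean with unit standard deviation.
cont = diag_gaussian_log_prob(np.zeros((1, 2)), np.zeros(2), np.zeros((1, 2)))
print(np.exp(disc), cont)  # [0.25 0.25] and about [-1.8379]
```

Everything downstream of these functions (the ratio, clipping, and loss) is identical for both action types.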

3. Sample Efficiency

While not as sample-efficient as off-policy methods (e.g., DDPG), PPO strikes a good balance between efficiency and simplicity.

Applications of PPO 🌟

1. Robotics 🤖

PPO is widely used in training robots to perform tasks like walking, grasping, and navigating dynamic environments.

2. Gaming 🎮

From mastering Atari games to excelling in complex 3D environments, PPO has been a go-to for game-playing agents.

3. Simulations 🌍

PPO is also used to train agents in simulated environments for industries such as healthcare, finance, and supply chain optimization.

PPO vs. Other Algorithms 🥊

Strengths and Limitations of PPO ⚖️

Strengths

- Stable Learning: The clipped objective prevents wild updates.

- Scalability: Works well with multi-threaded environments.

- Easy to Implement: Relatively simple compared to TRPO or SAC.

Limitations

- Sample Inefficiency: Requires more samples than off-policy algorithms.

- Hyperparameter Sensitivity: Performance depends on tuning parameters like clipping range and learning rate.
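Because of this sensitivity, it helps to keep hyperparameters in one place and sweep the ones that matter most. A sketch using a Python dataclass; the defaults are the values the PPO paper reports for its MuJoCo experiments (clipping ε = 0.2, Adam step size 3 × 10⁻⁴, horizon 2048, 10 epochs, minibatch size 64, γ = 0.99, λ = 0.95). Treat them as reasonable starting points rather than universal settings. The class and field names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PPOConfig:
    # Defaults follow the PPO paper's MuJoCo settings; tune them per task.
    clip_eps: float = 0.2        # clipping range is [1 - clip_eps, 1 + clip_eps]
    learning_rate: float = 3e-4  # Adam step size
    horizon: int = 2048          # timesteps collected per actor before each update
    epochs: int = 10             # passes over each batch
    minibatch_size: int = 64
    gamma: float = 0.99          # discount factor
    gae_lambda: float = 0.95     # GAE parameter


base = PPOConfig()
# Sweep the clipping range and the learning rate, leaving everything else fixed.
sweep = [replace(base, clip_eps=eps, learning_rate=lr)
         for eps in (0.1, 0.2, 0.3)
         for lr in (1e-4, 3e-4)]
print(len(sweep))  # 6 configurations
```

Making the config frozen means each run's settings cannot be mutated mid-experiment, which keeps sweep results easy to attribute.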

Why PPO is a Game-Changer 🚀

Since its introduction, PPO has been adopted across industries for its simplicity, stability, and versatility.

OpenAI themselves have used PPO to train agents in tasks ranging from robotic manipulation to competitive gaming environments like Dota 2.

Final Thoughts 🌟

Proximal Policy Optimization (PPO) strikes the perfect balance between simplicity and effectiveness, making it a favorite for researchers and practitioners alike.

Whether you’re training robots, optimizing supply chains, or developing AI for gaming, PPO is a powerful tool in your RL arsenal.

Ready to take your AI projects to the next level?

Dive into PPO today! 🤖💡
