Demystifying Deep Q-Networks (DQN): How AI Masters Games and Beyond 🎮🤖

If you’ve ever been amazed by an AI beating human players in Atari games or performing complex tasks, chances are Deep Q-Networks (DQN) were at work.

Introduced by DeepMind in 2013, DQN revolutionized reinforcement learning by combining Q-learning with the power of deep neural networks.

Let’s unpack what makes DQN so impactful and explore its inner workings in detail! 🚀

What is a Deep Q-Network (DQN)? 🤔

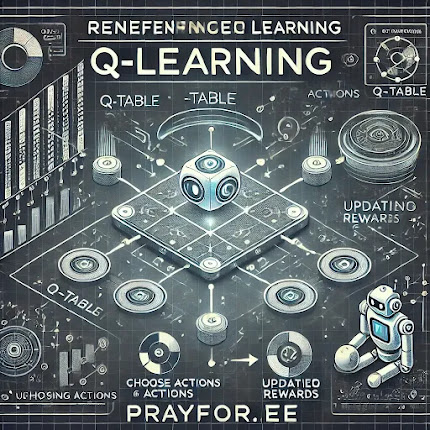

At its core, DQN is an extension of Q-learning, designed to handle environments with high-dimensional state spaces, such as images or videos.

Instead of using a traditional Q-table to store state-action values, DQN leverages a deep neural network to approximate the Q-values, making it scalable and efficient for complex tasks.

Why It Matters

Before DQN, reinforcement learning struggled with tasks involving large or continuous state spaces, because a Q-table needs a separate entry for every state-action pair.

DQN bridged this gap, making it possible for AI to excel in environments like Atari games, where states are represented as raw pixel inputs.

How DQN Works: A Step-by-Step Guide 🛠️

1️⃣ Input Representation

The input to the DQN is a high-dimensional state, such as a frame from a video game. To improve decision-making, DQN often stacks several consecutive frames to capture motion.
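
To make frame stacking concrete, here is a minimal sketch using only the Python standard library. The `FrameStack` class, its method names, and the choice of `k=4` frames are illustrative assumptions rather than part of any particular library, and the string "frames" stand in for real image arrays.

```python
from collections import deque


class FrameStack:
    """Hypothetical helper: keeps the k most recent frames as one stacked state."""

    def __init__(self, k=4):
        self.k = k
        self.frames = deque(maxlen=k)

    def reset(self, first_frame):
        # At the start of an episode, fill the stack by repeating the first frame.
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(first_frame)
        return self.state()

    def push(self, frame):
        # Appending to a full deque drops the oldest frame automatically.
        self.frames.append(frame)
        return self.state()

    def state(self):
        # Oldest frame first, newest frame last.
        return tuple(self.frames)


stack = FrameStack(k=4)
state = stack.reset("f0")
state = stack.push("f1")
state = stack.push("f2")
print(state)  # ('f0', 'f0', 'f1', 'f2')
```

Because the stacked state contains several moments in time, the network can infer things a single frame cannot show, such as which way a ball is moving.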

2️⃣ Neural Network Architecture

A convolutional neural network (CNN) is used to process the input.

- Convolution Layers: Extract spatial features from the input.

- Fully Connected Layers: Map the extracted features to Q-values for each action.
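
To see how the convolution and fully connected layers fit together, the sketch below traces tensor shapes through one possible network. The specific sizes (84×84 inputs, 4 stacked frames, three convolution layers, a 512-unit hidden layer) follow the commonly cited 2015 version of DQN and are assumptions here, not something this article specifies. The function names and `n_actions=6` are purely illustrative.

```python
def conv_out(size, kernel, stride):
    """Output width/height of a convolution with no padding."""
    return (size - kernel) // stride + 1


# (out_channels, kernel, stride) per convolution layer: assumed values.
CONV_LAYERS = [(32, 8, 4), (64, 4, 2), (64, 3, 1)]


def describe_network(input_size=84, n_frames=4, n_actions=6, hidden=512):
    """Return the shape of the data after each stage of the network."""
    shapes = [(n_frames, input_size, input_size)]  # stacked input frames
    size = input_size
    for channels, kernel, stride in CONV_LAYERS:
        size = conv_out(size, kernel, stride)
        shapes.append((channels, size, size))
    flat = shapes[-1][0] * size * size  # flatten before the dense layers
    shapes.append((flat,))
    shapes.append((hidden,))      # fully connected hidden layer
    shapes.append((n_actions,))   # one Q-value per action
    return shapes


for shape in describe_network():
    print(shape)
# (4, 84, 84)
# (32, 20, 20)
# (64, 9, 9)
# (64, 7, 7)
# (3136,)
# (512,)
# (6,)
```

Note that the final layer has one output per action, so a single forward pass scores every action at once.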

3️⃣ Output

The output of the network is a vector of Q-values, one per action. Each element estimates the expected cumulative (discounted) reward of taking that action in the current state.

4️⃣ Training the Network

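DQN uses a modified version of the Q-learning update rule:

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

However, instead of directly updating a Q-table, the network parameters (weights) are optimized to minimize the loss function:

$$L(\theta) = \mathbb{E}_{(s, a, r, s')} \left[ \left( r + \gamma \max_{a'} Q(s', a'; \theta^{-}) - Q(s, a; \theta) \right)^2 \right]$$

Where:

- $s$, $a$, $r$, $s'$: the state, the action taken, the reward received, and the next state

- $\alpha$: the learning rate

- $\gamma$: the discount factor, which weights future rewards

- $\theta$: the weights of the network being trained

- $\theta^{-}$: the weights of the target network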
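
As a rough numeric sketch of the training signal for a single transition, the code below computes a target value and the squared error the network would be trained to reduce. The function names, the `done` flag for episode ends, and all the numbers are made up for illustration. A real implementation would average this error over a batch and backpropagate it through the network.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """Target: reward plus the discounted best next Q-value (just reward at episode end)."""
    if done:
        return reward
    return reward + gamma * max(next_q_values)


def squared_td_error(q_sa, target):
    """Squared difference between the target and the current estimate Q(s, a)."""
    return (target - q_sa) ** 2


q_values = [1.0, 2.0, 0.5]   # current network output for state s (made-up numbers)
action = 1                   # action that was actually taken
next_q = [0.0, 3.0, 1.0]     # Q-values for the next state s' (made-up numbers)

target = td_target(reward=1.0, next_q_values=next_q, done=False, gamma=0.9)
loss = squared_td_error(q_values[action], target)
print(round(target, 2), round(loss, 2))  # 3.7 2.89
```

Only the Q-value of the action that was taken enters the loss; the other outputs for that state are left alone in this update.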

Key Innovations of DQN 🔬

1. Experience Replay

Instead of updating the network with consecutive samples, DQN stores experiences (state, action, reward, next state) in a replay buffer. Randomly sampling from this buffer helps:

- Break correlation between samples

- Stabilize training
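
A replay buffer can be sketched in a few lines of standard-library Python. The `ReplayBuffer` class and its method names are hypothetical, and the extra `done` flag (marking the end of an episode) is an assumption added on top of the four fields listed above.

```python
import random
from collections import deque


class ReplayBuffer:
    """Hypothetical fixed-size buffer of past transitions."""

    def __init__(self, capacity):
        # When the buffer is full, the oldest transition is discarded.
        self.buffer = deque(maxlen=capacity)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform random sampling (without replacement) breaks up the
        # ordering in which the transitions were collected.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


buffer = ReplayBuffer(capacity=3)
for i in range(5):
    buffer.add(state=i, action=0, reward=1.0, next_state=i + 1, done=False)

print(len(buffer))        # 3
batch = buffer.sample(2)  # two random transitions from the three most recent
```

With a capacity of 3, the first two transitions have already been pushed out, so every sampled state is 2, 3, or 4.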

2. Target Network

A separate target network is used to calculate the target Q-values. Its weights are held fixed between periodic copies from the main network, which reduces the instability caused by targets that shift with every update.
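
One simple way to keep a target network in step with the main network is a periodic hard copy of the weights, sketched below. The `TargetSync` class, the `sync_every` parameter, and the dictionary standing in for network weights are all illustrative assumptions. Real code would copy the parameters of an actual neural network and use a much longer interval.

```python
import copy


class TargetSync:
    """Hypothetical helper: refreshes target weights every `sync_every` steps."""

    def __init__(self, online_weights, sync_every=1000):
        self.sync_every = sync_every
        self.target_weights = copy.deepcopy(online_weights)
        self.steps = 0

    def step(self, online_weights):
        # Call once per training step; returns True when a copy happened.
        self.steps += 1
        if self.steps % self.sync_every == 0:
            self.target_weights = copy.deepcopy(online_weights)
            return True
        return False


online = {"w": [0.0]}                # stand-in for the main network's weights
sync = TargetSync(online, sync_every=3)

for _ in range(4):
    online["w"][0] += 1.0            # pretend a gradient step changed the weights
    sync.step(online)

print(online["w"][0], sync.target_weights["w"][0])  # 4.0 3.0
```

The target weights lag behind the online weights on purpose: they only caught up at step 3 and will not move again until step 6.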

3. ε-Greedy Policy

To balance exploration and exploitation, DQN uses an ε-greedy strategy:

- With probability ε, take a random action (explore).

- Otherwise, choose the action with the highest Q-value (exploit).
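
The two cases above translate almost directly into code. In this sketch, `epsilon_greedy` is an illustrative function name. The `linear_epsilon` schedule, which shrinks ε from 1.0 to 0.1 over a fixed number of steps, is an added assumption, since it is common to explore heavily at first and less later on.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                   # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


def linear_epsilon(step, start=1.0, end=0.1, decay_steps=1_000_000):
    """Assumed schedule: move linearly from `start` to `end`, then stay at `end`."""
    fraction = min(step / decay_steps, 1.0)
    return start + fraction * (end - start)


q_values = [0.1, 0.9, 0.3]
print(epsilon_greedy(q_values, epsilon=0.0))  # 1 (always greedy when epsilon is 0)
print(linear_epsilon(0))                      # 1.0
```

With `epsilon=1.0` every action is random, and with `epsilon=0.0` the agent always takes the action it currently rates highest.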

Applications of DQN 🌍

1. Gaming 🎮

- Atari Games: DQN achieved human-level performance in games like Pong and Breakout.

- Complex Games: Ideas from DQN have carried over into research on strategy games like StarCraft.

2. Robotics 🤖

DQN enables robots to learn tasks like object manipulation and navigation.

3. Autonomous Systems 🚗

DQN-style methods have been explored for decision-making in environments with dynamic and complex state spaces, such as autonomous driving.

Strengths and Limitations of DQN 🏆⚠️

Strengths

- Handles high-dimensional inputs, such as images.

- Introduced techniques like experience replay and target networks, improving stability.

- Generalizable to a variety of tasks.

Limitations

- Sample Inefficiency: Requires a large number of interactions with the environment to learn effectively.

- High Computational Cost: Training a DQN can be resource-intensive.

- Overestimation Bias: Prone to overestimating Q-values, which can lead to suboptimal policies.

DQN vs. Advanced Algorithms 🥊

While DQN was groundbreaking, newer algorithms like Double DQN, Dueling DQN, and Proximal Policy Optimization (PPO) have addressed some of its limitations.

Double DQN reduces overestimation bias and Dueling DQN refines the network architecture, though both still assume discrete actions. Policy-gradient methods like PPO are better suited for continuous action spaces.
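
To give a feel for how Double DQN tackles overestimation, the sketch below compares the two target calculations for a single non-terminal transition. The function names and the Q-values are made up for illustration. The key difference is that Double DQN lets the online network choose the next action and the target network score it.

```python
def argmax(values):
    """Index of the largest value."""
    return max(range(len(values)), key=lambda i: values[i])


def dqn_target(reward, next_q_target, gamma=0.99):
    # Standard DQN: the target network both picks and scores the best next action.
    return reward + gamma * max(next_q_target)


def double_dqn_target(reward, next_q_online, next_q_target, gamma=0.99):
    # Double DQN: the online network picks the action, the target network scores it.
    best_action = argmax(next_q_online)
    return reward + gamma * next_q_target[best_action]


next_q_online = [1.0, 2.0, 1.5]   # made-up online-network estimates for s'
next_q_target = [1.2, 1.0, 3.0]   # made-up target-network estimates for s'

standard = dqn_target(0.0, next_q_target, gamma=0.9)
double = double_dqn_target(0.0, next_q_online, next_q_target, gamma=0.9)
print(round(standard, 2), round(double, 2))  # 2.7 0.9
```

Since the Double DQN target never takes a maximum over the target network's own estimates, it can never exceed the standard DQN target for the same transition.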

Why DQN Was a Game-Changer 🚀

DQN didn’t just improve reinforcement learning; it made RL a practical option for problems with raw, high-dimensional inputs.

By combining deep learning with traditional RL methods, it paved the way for innovations in AI that we see today.

Final Thoughts 🌟

DQN represents a turning point in AI, showing us how machines can learn complex behaviors from raw data.

Whether it’s mastering a video game or navigating a robotic arm, DQN has proven its value across domains.

For anyone stepping into the world of AI, understanding DQN is essential. It’s not just an algorithm; it’s a gateway to the future of intelligent systems.
