How to Develop a Trading Bot with Reinforcement Learning: A Step-by-Step Guide

Imagine having a trading bot that learns and adapts to market trends all on its own. Sounds futuristic, right?

Well, thanks to reinforcement learning (RL), you can turn that vision into reality! Whether you’re a tech enthusiast or a finance junkie, this guide will show you how to create your own RL-powered trading bot.

Let’s get started! 🚀

Why Reinforcement Learning for Trading Bots? 🤖💰

Reinforcement learning is like training a virtual brain to make smart decisions: the agent tries actions, observes the results, and gradually learns which choices pay off.

Here’s why it’s well suited to building trading bots:

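Learning Over Time: RL bots learn from the outcomes of their actions and refine their strategy as they gain experience.

Adapting to Markets: Because they learn from feedback rather than fixed rules, they can be retrained as market conditions change.

Profit-Driven: RL bots learn to maximize cumulative reward, so when you reward profitable decisions, they aim to maximize your returns.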

With RL, your bot isn’t just following pre-set rules; it learns its own strategy from experience! 🌟

Step 1: Master the Basics 🧠

Before diving in, let’s cover the essentials of RL:

Agent: This is your bot, the decision-maker.

Environment: The market it interacts with, like stocks or crypto.

State: Market data the bot observes (e.g., prices, volume).

Action: Decisions like buying, selling, or holding.

Reward: Feedback after each action, usually based on the resulting profit or loss.

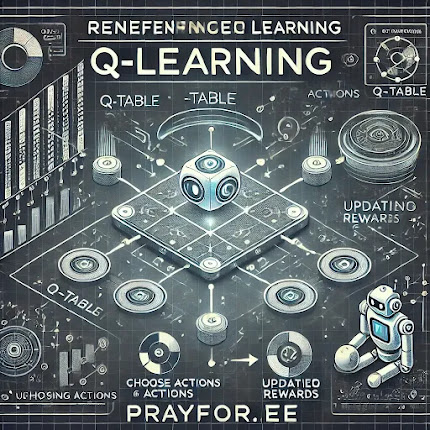

Pro Tip: Start by learning about RL algorithms like Q-Learning, Deep Q-Networks (DQN), or PPO (Proximal Policy Optimization).

They’re the magic behind your bot’s brain! 🪄
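To make the Q-Learning idea concrete, here is a minimal tabular sketch. It assumes a tiny, hypothetical discretized state space ("up" or "down" for the last price move) and the buy/sell/hold actions listed above. DQN replaces the table with a neural network and PPO learns a policy directly, so neither is shown here.

```python
import random
from collections import defaultdict

ACTIONS = ("hold", "buy", "sell")

def choose_action(q, state, epsilon):
    """Epsilon-greedy: explore with probability epsilon, otherwise pick the highest-valued action."""
    if random.random() < epsilon:
        return random.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[(state, a)])

def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """One Q-learning step: Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])

q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
# Hypothetical experience: buying after a "down" move earned a reward of 1.0
q_update(q, state="down", action="buy", reward=1.0, next_state="up")
print(q[("down", "buy")])  # 0.1
```

The learning rate `alpha` controls how far each update moves the estimate, and the discount `gamma` controls how much future rewards count; both values here are illustrative defaults.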

Step 2: Gather Market Data 📊

No data, no bot! Here’s what you need:

Historical Data: Stock prices, trading volume, and technical indicators.

Real-Time Data: APIs like Alpha Vantage, Binance, or Alpaca can provide live feeds.

Technical Features: Derive indicators such as moving averages, Bollinger Bands, or the Relative Strength Index (RSI) to enrich your data.

Remember, clean data = better results! Tools like Pandas make preprocessing a breeze. 🧹
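As a sketch of that preprocessing, the function below adds the three indicators mentioned above to a pandas DataFrame. It assumes a `close` column, the common 20-period Bollinger window with 2 standard deviations, and a 14-period RSI. The RSI uses simple rolling averages rather than Wilder’s smoothing, and the price series is synthetic.

```python
import numpy as np
import pandas as pd

def add_indicators(df: pd.DataFrame, sma_window: int = 20, rsi_window: int = 14) -> pd.DataFrame:
    """Add a simple moving average, Bollinger Bands and RSI to a frame with a 'close' column."""
    out = df.copy()
    close = out["close"]

    # Simple moving average and Bollinger Bands (mean +/- 2 rolling standard deviations)
    out["sma"] = close.rolling(sma_window).mean()
    rolling_std = close.rolling(sma_window).std()
    out["bb_upper"] = out["sma"] + 2 * rolling_std
    out["bb_lower"] = out["sma"] - 2 * rolling_std

    # RSI from simple rolling averages of gains and losses (not Wilder's smoothing)
    delta = close.diff()
    avg_gain = delta.clip(lower=0).rolling(rsi_window).mean()
    avg_loss = (-delta.clip(upper=0)).rolling(rsi_window).mean()
    rs = avg_gain / avg_loss
    out["rsi"] = 100 - 100 / (1 + rs)

    # Drop the warm-up rows where the rolling windows are not yet full
    return out.dropna()

# Synthetic, deterministic prices for illustration only
t = np.arange(100)
prices = pd.DataFrame({"close": 100 + 10 * np.sin(t / 5) + 0.1 * t})
features = add_indicators(prices)
print(features.tail())
```

Dropping the warm-up rows keeps incomplete indicator values out of the bot’s observations.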

Step 3: Build a Simulated Environment 🎮

Your bot needs a playground before entering the real market. Set up an environment with:

Market Conditions: Define what the bot observes (e.g., prices, trends).

Action Options: List the actions (buy, sell, hold).

Reward System: Reward profits, but penalize risky or costly behavior, such as excessive trading.

You can use OpenAI Gym (now maintained as Gymnasium) to create your custom trading environment. It’s like building a mini stock market for your bot to train in! 🏗️
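Here is a minimal, hypothetical environment that mimics the `reset`/`step` interface used by Gym and Gymnasium without depending on either library. The state is the last few percentage returns plus the current position, the actions are hold/buy/sell, and the bot can only be long or flat. The reward is the return earned while holding minus a fixed cost per trade. That cost is one simple, assumed way to penalize churning; you could add other risk penalties.

```python
import random

HOLD, BUY, SELL = 0, 1, 2

class TradingEnv:
    """Hypothetical long/flat trading environment with a Gymnasium-style reset/step API."""

    def __init__(self, prices, window=5, trade_cost=0.001):
        if len(prices) < window + 2:
            raise ValueError("need at least window + 2 prices")
        self.prices = list(prices)
        self.window = window
        self.trade_cost = trade_cost

    def _obs(self):
        # Observation: the last `window` percentage returns plus the position (0 = flat, 1 = long)
        recent = self.prices[self.t - self.window:self.t + 1]
        returns = [(b - a) / a for a, b in zip(recent, recent[1:])]
        return tuple(returns) + (self.position,)

    def reset(self):
        self.t = self.window
        self.position = 0
        return self._obs(), {}

    def step(self, action):
        cost = 0.0
        if action == BUY and self.position == 0:
            self.position, cost = 1, self.trade_cost
        elif action == SELL and self.position == 1:
            self.position, cost = 0, self.trade_cost
        # Reward: return earned while holding over the next step, minus a cost for each trade
        price_now, price_next = self.prices[self.t], self.prices[self.t + 1]
        reward = self.position * (price_next - price_now) / price_now - cost
        self.t += 1
        terminated = self.t >= len(self.prices) - 1
        # Gymnasium-style 5-tuple: obs, reward, terminated, truncated, info
        return self._obs(), reward, terminated, False, {}

# One episode with a random policy on made-up prices
env = TradingEnv([100, 101, 102, 101, 103, 104, 103, 105, 106, 107])
obs, info = env.reset()
done, total = False, 0.0
while not done:
    obs, reward, done, truncated, info = env.step(random.choice([HOLD, BUY, SELL]))
    total += reward
print(f"episode reward: {total:.4f}")
```

To use it with an RL library, you would typically wrap the same logic in a `gymnasium.Env` subclass with declared observation and action spaces.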

Step 4: Train the Bot 🧑‍💻

Here’s where the magic happens:

Set Up Your Model: Use TensorFlow or PyTorch to build a neural network for your RL algorithm.

Train the Agent: Run simulations where the bot learns from its actions.

Evaluate Results: Test the bot on unseen data, such as a later time period it never trained on, to measure trading performance (for example, returns).

Fine-Tune: Adjust settings like learning rates and reward functions to improve performance.

Patience is key here. Training can take hours or even days, so plan your experiments carefully! ⏳
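For market data, “unseen” should mean later in time. Shuffling rows would let the bot peek at the future. The helpers below are a minimal sketch, with hypothetical names, of a chronological train/test split and of walk-forward windows. Walk-forward is one common way to repeatedly retrain on recent data and test on the period that follows.

```python
def chronological_split(prices, train_frac=0.8):
    """Split a time-ordered series into an earlier training segment and a later test segment.

    No shuffling: the test segment is strictly later in time, so the bot is evaluated
    on data it could not have seen during training (avoids look-ahead bias).
    """
    if not 0 < train_frac < 1:
        raise ValueError("train_frac must be between 0 and 1")
    cut = int(len(prices) * train_frac)
    return prices[:cut], prices[cut:]


def walk_forward_windows(n, train_size, test_size):
    """Yield (train_indices, test_indices) range pairs that roll forward through time."""
    start = 0
    while start + train_size + test_size <= n:
        yield (range(start, start + train_size),
               range(start + train_size, start + train_size + test_size))
        start += test_size


train, test = chronological_split(list(range(10)), train_frac=0.8)
print(train, test)
for train_idx, test_idx in walk_forward_windows(10, train_size=4, test_size=2):
    print(list(train_idx), "->", list(test_idx))
```

Each walk-forward test window sits immediately after its training window, which mirrors how the bot would be retrained and then deployed.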

Step 5: Test in Real Markets 🚦

Once your bot is trained, it’s time to take it for a test drive:

Paper Trading: Simulate trades with real market data but no actual money.

Monitor Performance: Keep an eye on metrics like ROI (return on investment).

Optimize: Continuously retrain the bot to adapt to changing market conditions.
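While paper trading, you can compute metrics like ROI from the account’s value over time. The sketch below also computes maximum drawdown, another common risk metric. It assumes you record a hypothetical list of account values, one per day.

```python
def roi(equity):
    """Return on investment: (final value - initial value) / initial value."""
    return (equity[-1] - equity[0]) / equity[0]

def max_drawdown(equity):
    """Largest peak-to-trough decline, as a fraction of the peak (0.25 means a 25% drop)."""
    peak, worst = equity[0], 0.0
    for value in equity:
        peak = max(peak, value)
        worst = max(worst, (peak - value) / peak)
    return worst

# Hypothetical account values recorded at the end of each paper-trading day
equity_curve = [10_000, 10_400, 9_880, 10_250, 10_900]
print(f"ROI: {roi(equity_curve):.1%}, max drawdown: {max_drawdown(equity_curve):.1%}")
# ROI: 9.0%, max drawdown: 5.0%
```

Tracking drawdown alongside ROI shows how much pain the bot put you through to earn its return.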

Start small and scale up as your bot proves its worth.

Think of it as teaching a toddler to walk before running a marathon! 🏃‍♂️

Challenges and Tips ⚠️

Building an RL trading bot isn’t all sunshine and rainbows. Here’s what to watch out for:

Volatile Markets: RL bots might struggle with sudden market crashes or booms.

Overfitting: A bot that memorizes past data will fail on new data; validate it on out-of-sample periods to make sure it generalizes.

Legal and Ethical Concerns: Make sure your bot complies with trading regulations and your broker’s or exchange’s terms.

But don’t worry – every challenge is a learning opportunity! 💡

The Future of RL in Trading 🌐

Reinforcement learning is gaining traction in trading.

From smarter strategies to real-time adaptation, the possibilities are endless.

With persistence and the right tools, your RL bot could revolutionize how you trade.

Are you ready to code your financial future? 💼

#AI #DL #ML #LLM #RL #Market #RLTradingBot #AITrading #ReinforcementLearning #FinanceTech #SmartInvesting
